Building a Layer 7 Load Balancer

Behind every high-performing system lies a load balancer making invisible yet critical decisions—discover how these unsung heroes shape the digital world.

Load balancers are essential for applications or services that handle high volumes of traffic, ensuring that they can scale efficiently to meet demand. Modern load balancers have evolved into comprehensive traffic management solutions, often incorporating rate limiting, caching, DDoS protection, and more, making them indispensable for high-scale systems.

What is a load balancer?

Imagine you’re at an airport, standing in line for the security check. There is a single line of passengers, but there are five booths with agents checking boarding passes and IDs. At the front of the line, there is an agent directing passengers to available booths. This agent acts like a basic load balancer, distributing incoming requests (passengers) to backend servers (TSA agents) for processing.

A load balancer does more than distribute requests. It also monitors the health of backend servers by periodically sending health checks (heartbeats), so that requests are not routed to servers that have stopped responding.

Types of Load Balancers

There are two common types of load balancers:

- Layer 4 Load Balancer: Operates at the transport layer (TCP/UDP), distributing traffic based on IP addresses and ports.

- Layer 7 Load Balancer: Operates at the application layer (HTTP), distributing requests based on more granular details such as URL paths, headers, and even request content.

Popular load balancers include AWS Elastic Load Balancer, NGINX, and HAProxy.

What is a layer 4 load balancer?

A Layer 4 load balancer operates at the transport layer (OSI Layer 4), distributing network traffic based on information from the TCP/UDP protocol. It does not inspect application-level data, so it forwards packets to the backend servers without interpreting their content. Its decisions are based on transport-level fields such as the source and destination IP addresses and ports.
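To make the "no inspection" point concrete, here is a rough sketch of transport-level forwarding in Go. It is not taken from any particular product: the names `pickBackend`, `forward`, and `serve`, the backend addresses, and the choice of hashing the client IP are all assumptions made for illustration. The bytes are copied in both directions without ever being parsed.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"io"
	"log"
	"net"
)

// pickBackend hashes the client address so the same client keeps landing on
// the same backend. Only transport-level information is used.
// Assumes backends is non-empty.
func pickBackend(clientAddr string, backends []string) string {
	h := fnv.New32a()
	h.Write([]byte(clientAddr))
	return backends[h.Sum32()%uint32(len(backends))]
}

// forward pipes raw bytes between the client and the backend without
// interpreting them.
func forward(client net.Conn, backendAddr string) {
	defer client.Close()
	backend, err := net.Dial("tcp", backendAddr)
	if err != nil {
		log.Printf("dial %s: %v", backendAddr, err)
		return
	}
	defer backend.Close()
	go io.Copy(backend, client) // client -> backend
	io.Copy(client, backend)    // backend -> client
}

// serve accepts TCP connections and hands each one to a backend.
func serve(listenAddr string, backends []string) error {
	ln, err := net.Listen("tcp", listenAddr)
	if err != nil {
		return err
	}
	for {
		conn, err := ln.Accept()
		if err != nil {
			return err
		}
		host, _, err := net.SplitHostPort(conn.RemoteAddr().String())
		if err != nil {
			host = conn.RemoteAddr().String()
		}
		go forward(conn, pickBackend(host, backends))
	}
}

func main() {
	// Hypothetical backend addresses.
	backends := []string{"127.0.0.1:9001", "127.0.0.1:9002"}
	a := pickBackend("203.0.113.7", backends)
	b := pickBackend("203.0.113.7", backends)
	fmt.Println("same backend both times:", a == b)
	// To actually proxy traffic: log.Fatal(serve(":9000", backends))
}
```

Because the balancer never looks past the TCP stream, the same code would forward HTTP, database traffic, or any other TCP protocol unchanged.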

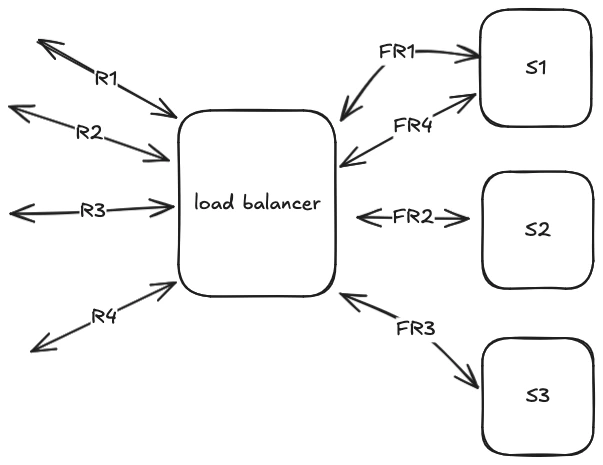

Below is a diagram of a simple load balancer. Requests (R1, R2, R3, R4) are distributed to three backend servers, and the forwarded requests are shown as FR1, FR2, FR3, and FR4.

Diagram of a load balancer

Below is code for a simple load balancer that distributes HTTP requests across backends in round-robin fashion.

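```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"sync/atomic"
	"time"
)

// Backend is one upstream server behind the load balancer.
type Backend struct {
	URL    string      // base URL, e.g. http://localhost:8081
	Health string      // health-check path, e.g. /health
	alive  atomic.Bool // shared by the health checker and the proxy (needs Go 1.19+)
}

// newBackend returns a backend that is assumed healthy until a check fails.
func newBackend(url, health string) *Backend {
	b := &Backend{URL: url, Health: health}
	b.alive.Store(true)
	return b
}

type LoadBalancer struct {
	backends []*Backend
	current  uint32
}

// client is shared by health checks and proxied requests. The timeout keeps a
// hung backend from blocking either of them forever.
var client = &http.Client{Timeout: 10 * time.Second}

// getNextBackend returns the next healthy backend in round-robin order,
// or nil if every backend is currently marked down.
func (lb *LoadBalancer) getNextBackend() *Backend {
	n := uint32(len(lb.backends))
	for i := uint32(0); i < n; i++ {
		next := atomic.AddUint32(&lb.current, 1)
		if b := lb.backends[next%n]; b.alive.Load() {
			return b
		}
	}
	return nil
}

// healthCheck starts one goroutine per backend that probes it every 10 seconds.
func (lb *LoadBalancer) healthCheck() {
	for _, backend := range lb.backends {
		go func(b *Backend) {
			for {
				resp, err := client.Get(b.URL + b.Health)
				up := err == nil && resp.StatusCode == http.StatusOK
				if err == nil {
					resp.Body.Close() // release the connection after every probe
				}
				b.alive.Store(up)
				if up {
					log.Printf("Backend %s is up\n", b.URL)
				} else {
					log.Printf("Backend %s is down\n", b.URL)
				}
				time.Sleep(10 * time.Second)
			}
		}(backend)
	}
}

// proxy forwards the request r to a backend and writes the backend's response to w.
func (lb *LoadBalancer) proxy(w http.ResponseWriter, r *http.Request) {
	backend := lb.getNextBackend()
	if backend == nil {
		http.Error(w, "Backend unavailable", http.StatusServiceUnavailable)
		return
	}

	// Rebuild the request so the method, query string, headers, and body survive.
	req, err := http.NewRequestWithContext(r.Context(), r.Method, backend.URL+r.URL.RequestURI(), r.Body)
	if err != nil {
		http.Error(w, "Bad request", http.StatusBadRequest)
		return
	}
	req.Header = r.Header.Clone()
	req.ContentLength = r.ContentLength

	resp, err := client.Do(req)
	if err != nil {
		http.Error(w, "Backend unavailable", http.StatusBadGateway)
		return
	}
	defer resp.Body.Close()

	// Copy the backend's headers and status code back to the client.
	for k, v := range resp.Header {
		w.Header()[k] = v
	}
	w.WriteHeader(resp.StatusCode)

	// The status line is already sent, so a copy error can only be logged.
	if _, err := io.Copy(w, resp.Body); err != nil {
		log.Printf("Error copying response from backend: %v", err)
	}
}

func main() {
	// This can be any list of backends that you have
	lb := &LoadBalancer{backends: []*Backend{
		newBackend("http://localhost:8081", "/health"),
		newBackend("http://localhost:8082", "/health"),
	}}

	lb.healthCheck() // returns immediately; the probes run in their own goroutines
	http.HandleFunc("/", lb.proxy)
	fmt.Println("Load Balancer started at :8080")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```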

For inspiration when building this simple load balancer, I referred to this article.

What is a layer 7 load balancer?

Layer 7 load balancers operate at the application layer (OSI Layer 7) and are more “intelligent” compared to Layer 4 load balancers. They can inspect request URLs, headers, and even body content, allowing them to make more sophisticated routing decisions based on application-level data.

Key Features of Layer 7 Load Balancers:

- Routing based on URLs and parameters: They can route requests to specific backend servers based on the request path, query parameters, or headers.

- Sticky sessions: They can ensure that requests from the same user are consistently routed to the same server, which is useful for session persistence.

- Caching: They can cache responses, reducing the need to forward identical requests to the backend servers, which helps improve performance and reduce server load.

While these features provide greater flexibility, they also come at the cost of increased complexity, maintenance, and performance overhead. However, for applications with sophisticated routing requirements, Layer 7 load balancers are often worth the trade-offs.
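As an illustration of the sticky-session feature listed above, here is a minimal sketch of cookie-based pinning. The cookie name `lb_backend`, the functions `chooseBackend` and `stickyHandler`, and the idea of storing the backend index in the cookie are assumptions for this example, not a description of how any specific load balancer does it.

```go
package main

import (
	"fmt"
	"net/http"
	"strconv"
	"sync/atomic"
)

const stickyCookie = "lb_backend" // hypothetical cookie name

var counter uint32

// chooseBackend returns the backend index pinned by the cookie value, or
// falls back to round robin when the value is missing or invalid.
// pinned reports whether the cookie was honored. Assumes n > 0.
func chooseBackend(cookieValue string, n int) (idx int, pinned bool) {
	if i, err := strconv.Atoi(cookieValue); err == nil && i >= 0 && i < n {
		return i, true
	}
	return int(atomic.AddUint32(&counter, 1) % uint32(n)), false
}

// stickyHandler shows where the choice plugs into an HTTP handler. It only
// reports the chosen backend instead of forwarding the request.
func stickyHandler(backends []string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		value := ""
		if c, err := r.Cookie(stickyCookie); err == nil {
			value = c.Value
		}
		idx, pinned := chooseBackend(value, len(backends))
		if !pinned {
			// First visit: remember the choice for later requests.
			http.SetCookie(w, &http.Cookie{
				Name:     stickyCookie,
				Value:    strconv.Itoa(idx),
				Path:     "/",
				HttpOnly: true,
			})
		}
		fmt.Fprintf(w, "would forward to %s\n", backends[idx])
	}
}

func main() {
	idx, pinned := chooseBackend("1", 3)
	fmt.Println(idx, pinned) // prints "1 true"
	_, pinned = chooseBackend("", 3)
	fmt.Println(pinned) // prints "false"
}
```

In a real server the handler would be registered with something like `http.Handle("/", stickyHandler(backends))`. A production setup would also need to handle the pinned backend being down, which this sketch ignores.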

SSL Termination

Layer 7 load balancers can perform SSL termination, where encrypted SSL/TLS traffic is decrypted at the load balancer, and plain HTTP requests are forwarded to the backend servers. This offloads the computational burden of encryption from the backend servers and centralizes SSL certificate management.

Here’s how you can add SSL termination to your load balancer using Go:

```go
package main

import (
	"crypto/tls"
	"fmt"
	"log"
	"net/http"
)

func main() {
	// Paths to the SSL certificate and private key (you need to generate these first)
	certFile := "cert.pem"
	keyFile := "key.pem"

	// LoadBalancer and its proxy method come from the round-robin example
	// earlier in this article; populate its backends as shown there.
	lb := &LoadBalancer{}

	// The server decrypts TLS here and hands plain HTTP requests to lb.proxy
	server := &http.Server{
		Addr:    ":8443", // or any other port
		Handler: http.HandlerFunc(lb.proxy),
		TLSConfig: &tls.Config{
			MinVersion: tls.VersionTLS13, // or tls.VersionTLS12 if compatibility is needed
		},
	}

	fmt.Println("Load Balancer with SSL started at :8443")
	// Start the HTTPS server with SSL
	log.Fatal(server.ListenAndServeTLS(certFile, keyFile))
}
```

Key Steps for SSL Termination:

- SSL Certificate: You’ll need to generate or obtain SSL certificates (cert.pem and key.pem). For development purposes, you can use self-signed certificates, but in production, you would obtain certificates from a trusted Certificate Authority (CA).

- TLS Configuration: The TLSConfig allows you to specify the minimum TLS version for security. TLS 1.3 is preferred for modern security standards.

- HTTPS Server: The ListenAndServeTLS method starts the HTTPS server, handling SSL/TLS connections.
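For the development case mentioned in the first step, a self-signed certificate can be produced with Go's standard library alone. The sketch below is one possible way to do it. The function name `selfSignedPEM`, the ECDSA P-256 key, and the 24-hour validity are my own choices for illustration, and the result should not be used in production.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/tls"
	"crypto/x509"
	"crypto/x509/pkix"
	"encoding/pem"
	"fmt"
	"log"
	"math/big"
	"time"
)

// selfSignedPEM returns a PEM-encoded certificate and private key for host.
// The certificate is signed with its own key, so browsers will not trust it.
func selfSignedPEM(host string) (certPEM, keyPEM []byte, err error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	serial, err := rand.Int(rand.Reader, new(big.Int).Lsh(big.NewInt(1), 62))
	if err != nil {
		return nil, nil, err
	}
	serial.Add(serial, big.NewInt(1)) // keep the serial number strictly positive

	tmpl := x509.Certificate{
		SerialNumber: serial,
		Subject:      pkix.Name{CommonName: host},
		DNSNames:     []string{host},
		NotBefore:    time.Now().Add(-time.Minute),
		NotAfter:     time.Now().Add(24 * time.Hour),
		KeyUsage:     x509.KeyUsageDigitalSignature,
		ExtKeyUsage:  []x509.ExtKeyUsage{x509.ExtKeyUsageServerAuth},
	}
	// Template and parent are the same certificate: that is what "self-signed" means.
	der, err := x509.CreateCertificate(rand.Reader, &tmpl, &tmpl, &key.PublicKey, key)
	if err != nil {
		return nil, nil, err
	}
	keyDER, err := x509.MarshalECPrivateKey(key)
	if err != nil {
		return nil, nil, err
	}
	certPEM = pem.EncodeToMemory(&pem.Block{Type: "CERTIFICATE", Bytes: der})
	keyPEM = pem.EncodeToMemory(&pem.Block{Type: "EC PRIVATE KEY", Bytes: keyDER})
	return certPEM, keyPEM, nil
}

func main() {
	certPEM, keyPEM, err := selfSignedPEM("localhost")
	if err != nil {
		log.Fatal(err)
	}
	// tls.X509KeyPair verifies that the certificate and key belong together.
	if _, err := tls.X509KeyPair(certPEM, keyPEM); err != nil {
		log.Fatal(err)
	}
	fmt.Printf("generated %d-byte certificate and %d-byte key\n", len(certPEM), len(keyPEM))
}
```

Writing the two byte slices to `cert.pem` and `key.pem` (for example with `os.WriteFile`) would give files that `ListenAndServeTLS` can load.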

Routing requests

A Layer 7 load balancer can inspect request URLs and headers and route traffic to specific backend services based on the request’s path or parameters. For example, API requests under /apiA and /apiB can be sent to two different services based on the URL path.

Each backend service typically registers its routes with the load balancer, specifying the paths or criteria that it can handle. The load balancer then forwards the appropriate requests to the right service.

Example: Minimal Request Router in Go

Here’s a minimal Go code example that demonstrates routing requests to different backend services using a simple path-based routing mechanism.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
	"time"
)

type Backend struct {
	URL string
}

// RouteTable maps a first path segment (e.g. "/apiA") to a specific backend
type RouteTable map[string]*Backend

type SimpleRouter struct {
	routes RouteTable
	client *http.Client
}

// NewSimpleRouter initializes a router with predefined routes
func NewSimpleRouter(routes RouteTable) *SimpleRouter {
	return &SimpleRouter{
		routes: routes,
		client: &http.Client{Timeout: 10 * time.Second},
	}
}

// ServeHTTP implements http.Handler and performs routing
func (sr *SimpleRouter) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	// Extract the first path segment: "/apiA/users" -> "apiA"
	basePath, _, _ := strings.Cut(strings.TrimPrefix(r.URL.Path, "/"), "/")
	backend, exists := sr.routes["/"+basePath]

	if !exists {
		http.Error(w, "Service not found", http.StatusNotFound)
		return
	}

	// Forward the request to the appropriate backend, keeping the method,
	// query string, headers, and body
	req, err := http.NewRequestWithContext(r.Context(), r.Method, backend.URL+r.URL.RequestURI(), r.Body)
	if err != nil {
		http.Error(w, "Bad request", http.StatusBadRequest)
		return
	}
	req.Header = r.Header.Clone()
	req.ContentLength = r.ContentLength

	resp, err := sr.client.Do(req)
	if err != nil {
		http.Error(w, "Backend unavailable", http.StatusBadGateway)
		return
	}
	defer resp.Body.Close()

	// Copy backend headers, status code, and body back to the client
	for k, v := range resp.Header {
		w.Header()[k] = v
	}
	w.WriteHeader(resp.StatusCode)
	if _, err := io.Copy(w, resp.Body); err != nil {
		// The status line is already sent, so the error can only be logged
		log.Printf("Error copying response from backend: %v", err)
	}
}

func main() {
	// Register routes to backend services
	routes := RouteTable{
		"/apiA": {URL: "http://localhost:8081"},
		"/apiB": {URL: "http://localhost:8082"},
	}

	router := NewSimpleRouter(routes)

	// Start the HTTP server with the router
	fmt.Println("Load Balancer started at :8080")
	log.Fatal(http.ListenAndServe(":8080", router))
}
```
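The router above only looks at the first path segment. When services register overlapping paths, one common approach is to pick the longest registered prefix that matches. The sketch below shows that idea in isolation. The function `matchRoute`, the plain string-to-string route table, and the extra `/apiA/admin` route on port 8083 are hypothetical and only there to illustrate the matching rule.

```go
package main

import (
	"fmt"
	"strings"
)

// matchRoute returns the backend registered for the longest prefix of path.
// A prefix matches the path itself or any sub-path of it, so "/apiA" matches
// "/apiA/users" but not "/apiAB".
func matchRoute(path string, routes map[string]string) (string, bool) {
	best, backend := "", ""
	for prefix, b := range routes {
		subtree := strings.TrimSuffix(prefix, "/") + "/"
		if path == prefix || strings.HasPrefix(path, subtree) {
			if len(prefix) > len(best) {
				best, backend = prefix, b
			}
		}
	}
	return backend, best != ""
}

func main() {
	routes := map[string]string{
		"/apiA":       "http://localhost:8081",
		"/apiA/admin": "http://localhost:8083", // hypothetical extra service
		"/apiB":       "http://localhost:8082",
	}
	for _, p := range []string{"/apiA/items", "/apiA/admin/users", "/apiC"} {
		backend, ok := matchRoute(p, routes)
		fmt.Printf("%s -> %q (matched: %v)\n", p, backend, ok)
	}
}
```

With this rule, `/apiA/admin/users` goes to the more specific admin service while other `/apiA` paths keep going to the general one, and `/apiC` matches nothing.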

Rate limiting

Rate limiting is an important feature that prevents backend servers from being overwhelmed by excessive requests during high traffic periods. Without rate limiting or similar protection, servers—or the entire service—could experience downtime or degraded performance.

Several algorithms can implement rate limiting, each controlling the flow of traffic in a different way. A token bucket refills tokens at a steady rate and lets a request through only when a token is available, which permits short bursts up to the bucket’s capacity. A simpler alternative, the fixed-window counter, allows a limited number of requests per time window and denies any requests beyond that limit until the window resets. You can refer to this link for an overview of other algorithms.

Example: Fixed-Window Rate Limiter in Go

Below is a minimal implementation of a fixed-window rate limiter, the simpler of the two. It counts the requests in the current time window and rejects any beyond the limit.

```go
package main

import (
	"log"
	"net/http"
	"sync"
	"time"
)

// RateLimiter allows at most limit requests per window. The counter is reset
// once per window, which makes this a fixed-window counter.
type RateLimiter struct {
	requests   int
	limit      int
	window     time.Duration
	mu         sync.Mutex
	resetTimer *time.Ticker
}

func NewRateLimiter(limit int, window time.Duration) *RateLimiter {
	rl := &RateLimiter{
		requests:   0,
		limit:      limit,
		window:     window,
		resetTimer: time.NewTicker(window),
	}
	go rl.reset()

	return rl
}

// Allow reports whether another request fits in the current window.
func (rl *RateLimiter) Allow() bool {
	rl.mu.Lock()
	defer rl.mu.Unlock()

	if rl.requests >= rl.limit {
		return false
	}
	rl.requests++
	return true
}

// reset clears the counter every time the ticker fires.
func (rl *RateLimiter) reset() {
	for range rl.resetTimer.C {
		rl.mu.Lock()
		rl.requests = 0
		rl.mu.Unlock()
	}
}

// Middleware rejects requests with 429 Too Many Requests once the limit is hit.
func (rl *RateLimiter) Middleware(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if !rl.Allow() {
			log.Printf("Rate limit exceeded for %s", r.URL.Path)
			http.Error(w, "Rate limit exceeded", http.StatusTooManyRequests)
			return
		}
		next.ServeHTTP(w, r)
	})
}

func main() {
	// Allow 10 requests per minute across all clients
	limiter := NewRateLimiter(10, time.Minute)

	http.Handle("/", limiter.Middleware(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if _, err := w.Write([]byte("Request successful!")); err != nil {
			log.Printf("Error writing response: %v", err)
		}
	})))

	log.Println("Server started on :8080")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

The rate limiter above uses a single global request counter rather than per-client (for example, per-IP) tracking, which keeps it simple for demonstration purposes.
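The limiter above resets a counter once per window. For comparison, here is a rough sketch of a token bucket in the stricter sense, where tokens are refilled continuously and each request spends one. The type `TokenBucket` and its fields are my own naming for this illustration, and the capacity and refill rate in `main` are arbitrary.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// TokenBucket holds up to capacity tokens and refills at rate tokens per second.
type TokenBucket struct {
	mu       sync.Mutex
	capacity float64   // maximum burst size
	rate     float64   // tokens added per second
	tokens   float64   // tokens currently available
	last     time.Time // time of the previous refill
}

// NewTokenBucket returns a bucket that starts full.
func NewTokenBucket(capacity, ratePerSecond float64) *TokenBucket {
	return &TokenBucket{
		capacity: capacity,
		rate:     ratePerSecond,
		tokens:   capacity,
		last:     time.Now(),
	}
}

// Allow refills the bucket for the time elapsed since the last call, then
// spends one token if at least one is available.
func (tb *TokenBucket) Allow() bool {
	tb.mu.Lock()
	defer tb.mu.Unlock()

	now := time.Now()
	tb.tokens += now.Sub(tb.last).Seconds() * tb.rate
	if tb.tokens > tb.capacity {
		tb.tokens = tb.capacity
	}
	tb.last = now

	if tb.tokens < 1 {
		return false
	}
	tb.tokens--
	return true
}

func main() {
	tb := NewTokenBucket(3, 1) // burst of 3, refilled at 1 token per second
	for i := 1; i <= 4; i++ {
		fmt.Printf("request %d allowed: %v\n", i, tb.Allow())
	}
}
```

Run back to back, the first three requests use up the initial burst and the fourth is rejected until roughly a second has passed. Swapping this in for the counter would only require calling `tb.Allow()` from the middleware instead.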

Future improvements

For future improvements, I plan to:

- Set up a circuit breaker – To prevent overwhelming backends that are failing or underperforming by temporarily stopping requests to them.

- Explore different routing strategies, like weighted round robin or least connections, to optimize request distribution based on backend capacity.

- Dynamically update the backend servers list – Automatically remove any failing servers from the list and add new ones without downtime.

- Implement session persistence, ensuring that users consistently connect to the same backend during their session.

- Cache requests to reduce load on backends and improve response times for repeated requests.
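As a starting point for the routing-strategy item above, least connections can be sketched with a per-backend counter of in-flight requests. Everything here (the lowercase `backend` type, `leastConnections`, and `acquire`) is hypothetical scaffolding for illustration, not code from the load balancer in this article.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

type backend struct {
	url    string
	active int64 // in-flight requests, updated atomically
}

// leastConnections returns the backend with the fewest in-flight requests,
// or nil if the list is empty. Ties go to the earlier backend.
func leastConnections(backends []*backend) *backend {
	var best *backend
	for _, b := range backends {
		if best == nil || atomic.LoadInt64(&b.active) < atomic.LoadInt64(&best.active) {
			best = b
		}
	}
	return best
}

// acquire picks a backend and counts the request as in flight. The returned
// release function must be called once the response has been sent.
func acquire(backends []*backend) (*backend, func()) {
	b := leastConnections(backends)
	if b == nil {
		return nil, func() {}
	}
	atomic.AddInt64(&b.active, 1)
	return b, func() { atomic.AddInt64(&b.active, -1) }
}

func main() {
	pool := []*backend{
		{url: "http://localhost:8081"},
		{url: "http://localhost:8082"},
	}
	a, releaseA := acquire(pool)
	b, releaseB := acquire(pool)
	// Two overlapping requests land on different backends.
	fmt.Println(a.url, b.url) // prints "http://localhost:8081 http://localhost:8082"
	releaseA()
	releaseB()
}
```

In a proxy handler, `acquire` would be called before forwarding and the release function deferred, so slow backends naturally receive fewer new requests.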

See the GitHub repository.